Azure OpenAI

Azure OpenAI is a default AI Service Provider in WSO2 API Manager that provides access to OpenAI's language models through Azure's infrastructure. For more information about Azure OpenAI, see the Azure OpenAI Documentation.

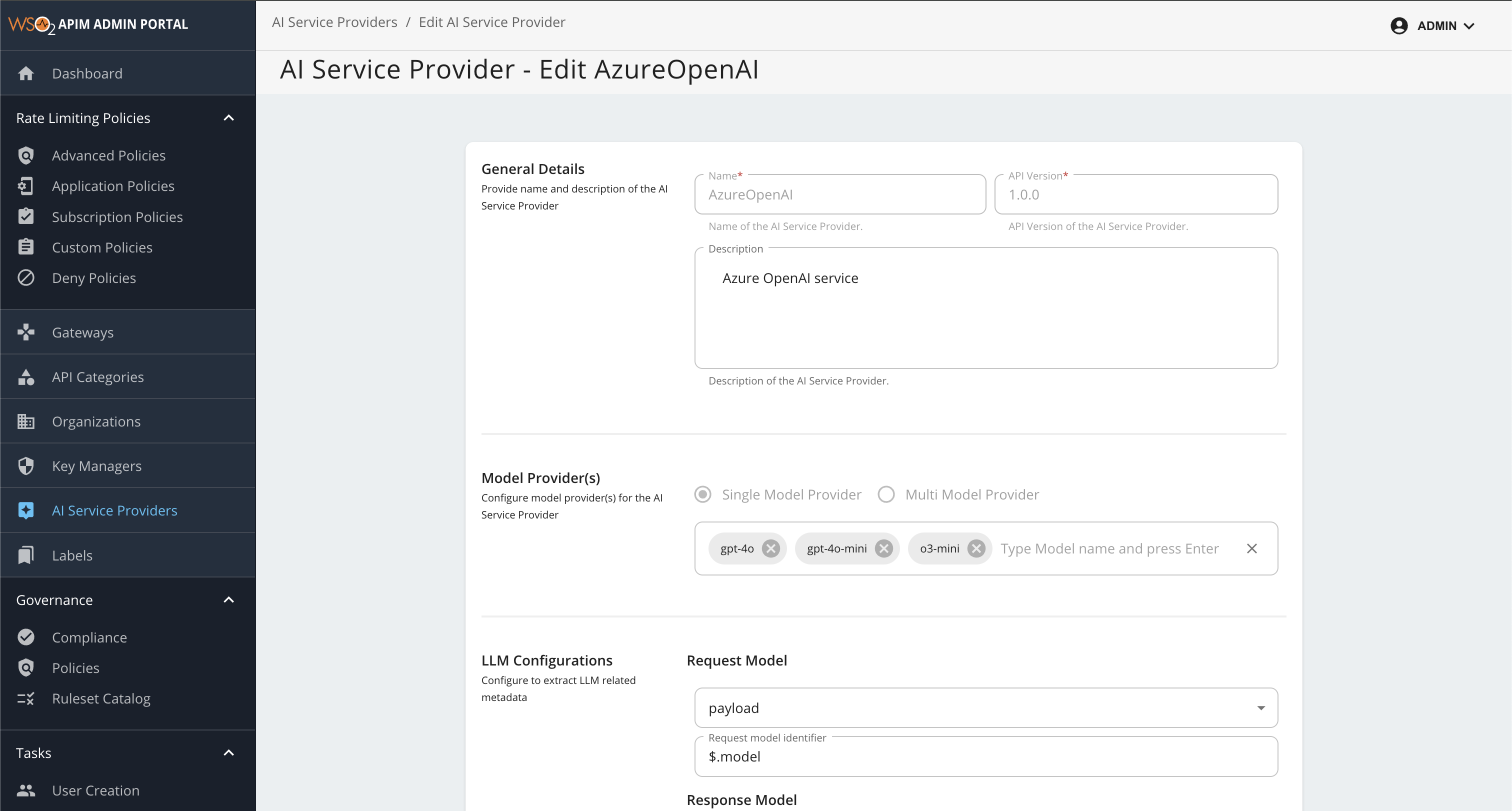

Configuring Azure OpenAI

Step 1: Access Configuration

- Log in to the Admin Portal (https://<hostname>:9443/admin).
- Navigate to AI Service Providers → AzureOpenAI.

Step 2: Configure Models

Read-Only Configurations

The following configurations are read-only and cannot be modified:

| Category | Fields |
|---|---|
| General Details | Name, API Version, Description |
| LLM Configurations | Request Model, Response Model, Prompt Token Count, Completion Token Count, Total Token Count, Remaining Token Count |
| LLM Provider Auth Configurations | Auth Type (Header, Query Parameter, or Unsecured); Auth Type Identifier (the header or query parameter identifier) |
| Connector Type for AI Service Provider | Connector Type |
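To illustrate what the three auth types listed above mean for an outgoing request, here is a minimal Python sketch. It is not WSO2 API Manager code: the function `apply_auth`, its parameter names, the lowercase auth type strings, and the example URL are all hypothetical and chosen only for illustration.

```python
from urllib.parse import urlencode, urlsplit, urlunsplit


def apply_auth(url, headers, auth_type, identifier=None, credential=None):
    """Return (url, headers) with the credential attached per auth type.

    Hypothetical helper: auth_type is one of "header", "query", "unsecured".
    """
    headers = dict(headers)  # copy so the caller's dict is not mutated
    if auth_type == "header":
        # Credential travels in a request header named by the identifier.
        headers[identifier] = credential
    elif auth_type == "query":
        # Credential is appended to the query string under the identifier.
        parts = urlsplit(url)
        extra = urlencode({identifier: credential})
        query = f"{parts.query}&{extra}" if parts.query else extra
        url = urlunsplit(parts._replace(query=query))
    elif auth_type != "unsecured":
        raise ValueError(f"unknown auth type: {auth_type}")
    # "unsecured" leaves both the URL and the headers untouched.
    return url, headers
```

With the Header auth type, the Auth Type Identifier names the header that carries the key; with Query Parameter, it names the query parameter. The values themselves are read-only in the Admin Portal for this provider.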

Editable Configurations

The following configurations can be updated:

| Category | Description |
|---|---|
| API Definition | The API definition file exposed by the AI service provider. |
| Model List | The list of models supported by the AI service provider. This list lets you configure routing strategies within your AI APIs. |

- By default, the following models are included: gpt-4o, gpt-4o-mini, and o3-mini.
- To add other models supported by Azure OpenAI, type the model name and press Enter.
- The model list enables model-based load balancing and failover. For more details, see Multi-Model Routing Overview.
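As a rough illustration of what load balancing and failover over a model list mean, the following Python sketch rotates through the default models and falls back to the next model when a call fails. It is a conceptual example only and does not reflect how WSO2 API Manager implements routing; `round_robin`, `call_with_failover`, and the `send` callback are hypothetical names.

```python
from itertools import cycle

# Default model list named in this page.
DEFAULT_MODELS = ["gpt-4o", "gpt-4o-mini", "o3-mini"]


def round_robin(models):
    """Return an endless iterator that rotates through the models in order."""
    return cycle(models)


def call_with_failover(models, send):
    """Try each model in order and return (model, result) for the first success.

    `send` is a hypothetical callable that takes a model name and either
    returns a response or raises an exception.
    """
    failed = []
    for model in models:
        try:
            return model, send(model)
        except Exception:
            # Record the failure and move on to the next model in the list.
            failed.append(model)
    raise RuntimeError(f"all models failed: {failed}")
```

In this sketch, the order of the list determines the failover order, which is one reason to keep the model list limited to models that are actually deployed.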

Step 3: Save Configuration

Click Update to apply your changes.

After you save your changes, the updated AzureOpenAI configuration takes effect and is available to your AI APIs, which can then use the models in the configured model list.